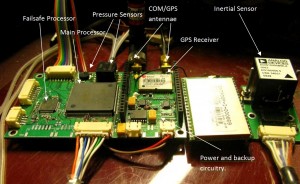

I’ve recently been doing a lot of interrupt-based software development on the Perseus Autopilot. There are quite a few things that need to happen asynchronously on the Autopilot, including sensor interfaces, control loops and communication/actuation loops. This can result in many interrupts, some of which can even trigger at the same time. So how does one deal with all of this in an application? It is worthwhile to first get into the details of interrupts, and then I will list a few pointers that could help you in your projects if you are writing heavily interrupt-based code.

Normally, the code you write in C, C++ and most other languages that run on a microcontroller (with the exception of assembly) allows you to create, modify and work with variables. These languages also allow you to call functions and, in the case of object-oriented languages such as C++, to create classes and objects. It is therefore important to get a good grasp of how these variables and objects are represented in the memory that is available to you (usually some form of RAM). The two sections of memory that we are interested in are the stack and the heap. This article is a good place to get some information about these memories.

What is important to us is what happens to these memories when an interrupt occurs. If an interrupt occurs during a function's execution, the processor stops what it was doing in that function and, depending on the type of processor, usually diverts to an interrupt vector, which then calls the ISR (interrupt service routine). Once the ISR has finished, the processor returns to where it left off. Note that the state of the processor before it left the execution must be saved, and restored once the ISR is completed. This ensures that any pending operations continue correctly once execution resumes.

Depending on the processor, variables used in the ISR are allocated either on a separate interrupt stack or on the same stack as the interrupted code. Where a separate stack is used, it must live in memory that the normal thread of execution does not use. The state of the processor before the interrupt is also pushed onto this stack. Since we are operating in an environment without an OS, everything runs in the same memory space. It is therefore very important to allocate enough heap and stack memory for your application, as exceeding these memories will cause undesirable and unpredictable problems.

Things can go wrong in several cases with ISR programming, and these errors are often extremely hard to debug. One scenario is if a variable is accessed from within an ISR and from within normally executed code at the same time. In this case, if the variable is not accessed atomically, the processor can be interrupted half-way through a read/write operation on the variable. If the ISR then modifies the variable, when execution returns, the value of the variable that will be used can become invalid. This can happen if global/static variables are modified from within and outside of an ISR simultaneously.
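To make the half-finished read concrete, here is a minimal sketch that simulates it on a desktop compiler. It assumes a processor that has to read a 32-bit counter as two 16-bit halves; the names `ticks_lo`, `ticks_hi`, `timer_isr` and `read_ticks_unsafe` are hypothetical, and the "interrupt" is just a function call placed between the two half-reads.

```c
#include <stdint.h>

/* Hypothetical shared state: a 32-bit tick count stored as two 16-bit
   halves, mimicking how a 16-bit CPU accesses a 32-bit variable.
   The count starts at 0x0000FFFF, one tick before the low half wraps. */
static volatile uint16_t ticks_lo = 0xFFFF;
static volatile uint16_t ticks_hi = 0x0000;

/* Stand-in for a timer ISR: increments the 32-bit count. */
static void timer_isr(void)
{
    if (++ticks_lo == 0) {
        ++ticks_hi;               /* carry into the upper half */
    }
}

/* Non-atomic read of the count. If fire_isr is non-zero, the simulated
   interrupt lands between the two half-reads. */
static uint32_t read_ticks_unsafe(int fire_isr)
{
    uint16_t lo = ticks_lo;       /* first half read */
    if (fire_isr) {
        timer_isr();              /* interrupt arrives here */
    }
    uint16_t hi = ticks_hi;       /* second half read, now stale vs. lo */
    return ((uint32_t)hi << 16) | lo;
}
```

With the interrupt landing in the middle, the function combines the old low half with the new high half. The count was 0x0000FFFF before the interrupt and 0x00010000 after it, yet the caller sees 0x0001FFFF, a value the counter never held.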

With that in mind, here are a few tips to help with developing interrupt-based systems without the use of an operating system:

- Plan Ahead

Draw your functions or write them in pseudo-code. Find out exactly what you need in the ISR and do everything else in the normal execution thread. It is also worthwhile to list all the functions that the application must perform, and decide which ones are time-critical and therefore need handling via an ISR. Everything else can be done via polling in the main thread. Figure out when your interrupts are likely to happen, and which variables they access or modify.
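One way this split might look in code is sketched below. The ISR only captures the time-critical value and raises a flag, and the main loop polls the flag and does the slower work. All names are hypothetical, and the `raw_value` argument stands in for reading a hardware data register, since a real ISR takes no arguments.

```c
#include <stdbool.h>
#include <stdint.h>

/* Set by the ISR, cleared by the main thread. */
static volatile bool sample_ready = false;
static volatile uint16_t latest_sample = 0;

/* Hypothetical "data ready" ISR: capture the value, raise the flag, leave. */
static void sensor_isr(uint16_t raw_value)
{
    latest_sample = raw_value;
    sample_ready = true;
}

/* One pass of the main loop: poll the flag and do the slow processing.
   Returns true if a sample was processed on this pass. */
static bool main_loop_step(uint32_t *filtered)
{
    if (!sample_ready) {
        return false;             /* nothing to do, carry on polling */
    }
    sample_ready = false;
    uint16_t sample = latest_sample;
    /* Simple low-pass filter, done outside the ISR. */
    *filtered = (*filtered * 3u + sample) / 4u;
    return true;
}
```

Writing the plan down this way also makes it obvious which variables are shared between the ISR and the main thread (here `sample_ready` and `latest_sample`), which is exactly the list you need when checking for access conflicts.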

- Nested Interrupts are Extremely Nasty

It is generally a good idea to keep all interrupts disabled while an ISR is running. Even though it might seem logical that ISRs should be “interruptible”, allowing this makes writing safe and reliable code extremely difficult. Nested execution of multiple interrupts can also rapidly fill the stack allocated to the interrupts. Once the ISR has finished, interrupts can be re-enabled, and on most processors any interrupt that occurred in the meantime is held pending, so its ISR will fire at that point.

- Keep It Short. Very Short

Lengthy ISRs can create problems, as they halt the execution of your main thread at unpredictable moments and for long periods. If nested interrupts are disabled (which they should be), lengthy ISRs also increase the time your processor takes to respond to other interrupts. Start the ISR, get what you need and get out. Do all the processing in the main thread unless you absolutely must do otherwise.
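A common way to keep an ISR this short is to have it drop incoming data into a small ring buffer and let the main thread drain it. The sketch below assumes a single ISR as the only writer and the main thread as the only reader; the names, the buffer size and the `byte` argument (standing in for a read of the receive register) are all hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define RX_BUF_SIZE 16u           /* power of two so indices wrap with a mask */

static volatile uint8_t rx_buf[RX_BUF_SIZE];
static volatile uint8_t rx_head = 0;   /* written only by the ISR */
static volatile uint8_t rx_tail = 0;   /* written only by the main thread */

/* Hypothetical receive ISR: store the byte and leave. */
static void uart_rx_isr(uint8_t byte)
{
    uint8_t next = (uint8_t)((rx_head + 1u) & (RX_BUF_SIZE - 1u));
    if (next != rx_tail) {        /* buffer full: drop the byte */
        rx_buf[rx_head] = byte;
        rx_head = next;
    }
}

/* Called from the main thread: fetch one byte if any is waiting. */
static bool rx_pop(uint8_t *out)
{
    if (rx_tail == rx_head) {
        return false;             /* buffer empty */
    }
    *out = rx_buf[rx_tail];
    rx_tail = (uint8_t)((rx_tail + 1u) & (RX_BUF_SIZE - 1u));
    return true;
}
```

Because each index is a single byte and has exactly one writer, this arrangement usually needs no interrupt masking, although that is worth confirming for your processor and compiler. Parsing, checksums and everything else then happen in the main thread on the bytes returned by `rx_pop`.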

- Don’t Call Functions

Unless you really have to. Calling functions in an ISR causes their arguments and local variables to be pushed onto the ISR stack, which increases its size. If functions that are called from within an ISR are also called from the main thread, it can lead to issues if the function is not designed to be re-entrant. Being re-entrant means that the variables the function works with are local to each call and specific to the execution context, rather than static or global state shared between callers. Here is a good article on writing re-entrant functions.
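The difference is easiest to see side by side. In this sketch, both function names are hypothetical: the first version keeps its result in a static buffer shared by every caller, while the second writes into a buffer owned by the caller.

```c
#include <stdint.h>

/* Not re-entrant: the result lives in one static buffer shared by every
   caller, so a call from an ISR overwrites the main thread's result. */
static const char *to_hex_shared(uint8_t value)
{
    static char buf[3];
    static const char digits[] = "0123456789ABCDEF";
    buf[0] = digits[value >> 4];
    buf[1] = digits[value & 0x0F];
    buf[2] = '\0';
    return buf;
}

/* Re-entrant: the caller supplies the buffer, so each execution context
   works on its own storage. */
static void to_hex_reentrant(uint8_t value, char out[3])
{
    static const char digits[] = "0123456789ABCDEF"; /* read-only, safe to share */
    out[0] = digits[value >> 4];
    out[1] = digits[value & 0x0F];
    out[2] = '\0';
}
```

If the main thread calls `to_hex_shared(0x1A)` and an ISR then calls `to_hex_shared(0xFF)` before the main thread has used the result, the main thread's pointer now reads "FF". With `to_hex_reentrant`, each context passes its own buffer and neither can disturb the other.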

- Use Volatile and Atomic Variables

If you are accessing variables both from within and outside an ISR, declare them with the volatile keyword. This tells the compiler not to optimise away or cache accesses to those variables, so every read and write in your code actually goes to memory. Without it, the compiler may keep a copy in a register and the main thread may never see the value the ISR wrote. Note that volatile does not by itself make access atomic. Built-in types no wider than the processor's native word are generally read and written atomically, but wider types, arrays and objects are not, so it pays to always check this with your compiler and processor. If you are accessing such data, you can make the access atomic by disabling interrupts while you work with it in the main thread. This makes sure that the data cannot be modified by the ISR whilst you are working with it. In this critical section you can then copy the data from the volatile variables to local ones which the ISR doesn’t access, and re-enable the interrupts.
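The copy-inside-a-critical-section pattern could be sketched as follows. The functions `disable_interrupts` and `enable_interrupts` are hypothetical stand-ins that only toggle a flag so the example runs on a desktop compiler; on real hardware they would be replaced by whatever your toolchain provides. The sample type and ISR are likewise made up for illustration.

```c
#include <stdint.h>

/* Hypothetical stand-ins for the platform's interrupt control. */
static int interrupts_enabled = 1;
static void disable_interrupts(void) { interrupts_enabled = 0; }
static void enable_interrupts(void)  { interrupts_enabled = 1; }

typedef struct {
    int16_t x;
    int16_t y;
    int16_t z;
} accel_sample_t;

/* Written by the ISR, read by the main thread. */
static volatile accel_sample_t shared_sample;

/* Hypothetical ISR: the arguments stand in for sensor register reads. */
static void accel_isr(int16_t x, int16_t y, int16_t z)
{
    shared_sample.x = x;
    shared_sample.y = y;
    shared_sample.z = z;
}

/* Main-thread read: copy the whole sample inside a short critical
   section, then work on the local copy with interrupts enabled again. */
static accel_sample_t read_sample(void)
{
    accel_sample_t local;
    disable_interrupts();         /* ISR cannot run past this point */
    local.x = shared_sample.x;
    local.y = shared_sample.y;
    local.z = shared_sample.z;
    enable_interrupts();          /* keep the critical section short */
    return local;
}
```

The critical section contains only the three copies, so interrupts stay off for as short a time as possible. If `read_sample` might be called while interrupts are already disabled, a variant that saves and restores the previous interrupt state would be safer than enabling them unconditionally.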

If you can think of any more, drop me a line/comment so I can update this.